Compare mysql vs postgres: A Clear Guide to Choosing the Right DB

Explore mysql vs postgres with real-world scenarios, performance insights, and practical guidance to pick the best DB for your project.

When you're standing at the crossroads of MySQL vs Postgres, the "right" choice isn't about which database is universally better—it's about which one is the right tool for the job you have right now. If your world revolves around high-speed writes and straightforward reliability, like for a bustling e-commerce site or a popular blog, MySQL is often the go-to. But if you're building something that needs to handle complex queries, guarantee rock-solid data integrity, and maybe even grow in unexpected ways, you'll want to lean toward Postgres.

Making the Right Database Choice

Picking between MySQL and PostgreSQL is one of those foundational decisions that can shape a project's entire lifecycle. Both are titans in the open-source relational database world, backed by decades of battle-tested performance. The key difference, though, lies in their core design philosophies, which makes them excel in very different scenarios.

This guide is designed to cut through the noise and give you practical, real-world insights. We're going to dig into their architecture, compare critical features, and look at how they perform under pressure to help you make a decision that you'll be happy with for years to come. Let's start with a high-level look before we get into the nitty-gritty.

Quick Comparison: MySQL vs. Postgres at a Glance

To get a quick feel for how these two databases stack up, this table summarizes their core philosophies and best-fit use cases. Think of it as your cheat sheet for the initial gut check.

| Attribute | MySQL | PostgreSQL |

|---|---|---|

| Core Philosophy | Speed, simplicity, and reliability | Extensibility, data integrity, and standards compliance |

| Primary Use Case | High-throughput web applications, read-heavy systems | Complex analytical queries, geospatial data, data warehousing |

| Data Types | Standard SQL types with a solid JSON implementation | Rich set of data types, including arrays, hstore, and advanced JSONB |

| Concurrency | Thread-per-connection model, efficient for many connections | Process-per-connection model, often used with a pooler |

| Extensibility | Pluggable storage engines (InnoDB is standard) | A rich ecosystem of extensions like PostGIS and pgvector |

As you can see, the debate isn't about which one is "better" in a vacuum. The real question is always, "Which one is better for my workload?" A WordPress site, for example, is a perfect match for MySQL's knack for fast, simple reads and writes. On the other hand, an application doing complex financial modeling will benefit immensely from PostgreSQL's strict ACID compliance and powerful analytical functions.

This choice is also playing out in the broader developer community. According to the 2025 Stack Overflow Developer Survey, PostgreSQL has decisively pulled ahead, favored by 55.6% of professional developers. MySQL now sits at 40.5%, reflecting a clear trend where developers are increasingly reaching for Postgres for new, modern applications.

No matter which path you take, you're not locked into a single ecosystem of tools. A flexible client like the TableOne cross-platform database GUI can smooth out your workflow by giving your team a consistent, modern interface for interacting with either database.

Understanding Their Origins and Core Philosophies

To get to the heart of the MySQL vs. Postgres debate, you have to go beyond a simple feature checklist. You need to look at their DNA. Each database was born with a different purpose, and those founding principles still shape their behavior, strengths, and weaknesses today. This isn't just a history lesson; it's the key to understanding why one might be a perfect fit for your project while the other could be a constant source of friction.

MySQL's story kicked off in 1995 with a very clear mission: be fast, reliable, and easy to use for the new and rapidly growing web. This practical philosophy made it the database of choice for the original LAMP stack (Linux, Apache, MySQL, PHP), becoming the engine behind countless websites, blogs, and early e-commerce platforms. The design deliberately favored high-speed reads and writes over rigid, academic adherence to SQL standards.

This focus on speed over strictness has real-world consequences. For example, MySQL has traditionally been more lenient with data types. If you tried to insert a string into a VARCHAR(50) column that was too long, older versions would often just truncate it and move on. PostgreSQL, on the other hand, would throw an error and reject the data. While modern MySQL is much stricter now, that "just get the job done" attitude is baked into its legacy.

MySQL: Built for Speed and Simplicity

Think about a typical web application like a CMS or a social media feed. These apps are all about a huge volume of simple, repetitive tasks—users logging in, posts being viewed, comments being added. MySQL’s architecture, especially with its default InnoDB storage engine, is optimized for exactly these kinds of read-heavy and write-intensive workloads. Its thread-per-connection model excels at juggling lots of concurrent users doing straightforward things.

Key Takeaway: MySQL’s philosophy is pure web pragmatism. It was built to be a workhorse that's easy to set up and scale for high-volume applications where performance and availability are the top priorities.

PostgreSQL: Data Integrity and Extensibility Above All

PostgreSQL comes from a completely different world. It began as the Ingres project at the University of California, Berkeley, back in 1986. Its core principles from day one were standards compliance, data integrity, and extensibility. The goal was, and still is, to be "the world's most advanced open source relational database."

You can see this commitment everywhere. It rigorously follows the SQL standard and is built on a powerful object-relational model. PostgreSQL is absolutely uncompromising when it comes to data integrity. For instance, it fully supports transactional DDL. This means you can wrap statements like CREATE TABLE or ALTER TABLE inside a transaction. If any part of your database migration script fails, the entire thing gets rolled back, leaving your schema untouched—a feature that can be a lifesaver during complex deployments.

This rigorous approach defines its feature set. PostgreSQL comes with a much richer set of built-in data types, like arrays, native JSONB (a binary, indexed JSON), and powerful geospatial types through the PostGIS extension. Extensibility isn't an afterthought; it's a core design principle, allowing it to evolve for use cases its creators never even imagined.

Ultimately, the choice often boils down to this fundamental trade-off: do you need the raw speed and operational simplicity of MySQL, or the robust data integrity and feature-rich, extensible world of PostgreSQL?

A Practical Feature and Architecture Comparison

When you get down into the weeds, the choice between MySQL and Postgres often hinges on specific architectural features that directly impact how you build modern applications. It’s not just about their core philosophies; it’s about how each one handles complex data, indexing, and concurrency. These differences have very real, practical consequences for your project's performance and ability to scale.

Let's move beyond the high-level talk and get into a detailed, feature-by-feature breakdown. We’ll dig into four critical areas with real-world scenarios to show not just what is different, but why it matters for your team.

Advanced Feature Showdown: MySQL vs. Postgres

For developers building complex, data-intensive applications, certain features are non-negotiable. The table below dives into the specifics of how MySQL and Postgres handle JSON data, specialized indexing, and their unique approaches to extensibility. This isn't just a feature checklist; it's a look at the practical implications behind each implementation.

| Feature | MySQL Implementation & Practical Use | PostgreSQL Implementation & Practical Use | Winner For... |

|---|---|---|---|

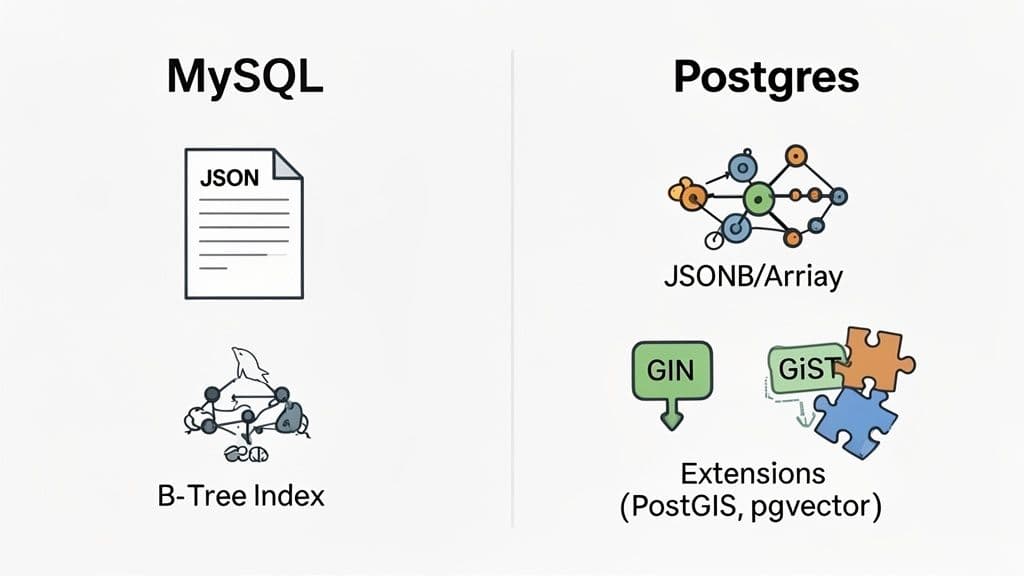

| JSON Support | Offers a JSON data type that stores data in an optimized binary format. Provides a solid set of functions for creating, manipulating, and searching documents. It’s a huge improvement over storing JSON as plain text. | Provides two types: json (stores exact text) and the more powerful jsonb (a decomposed binary format). The jsonb type can be indexed with GIN, offering massive performance gains for querying nested data. | Postgres: For applications that heavily query and manipulate complex, nested JSON documents at scale. The jsonb type and its indexing capabilities are a clear advantage here. |

| Indexing | Relies heavily on the standard B-tree index (great for most queries) and has excellent built-in full-text search. It's robust and highly optimized for common relational workloads but lacks a wide variety of specialized index types. | Offers a whole suite of specialized index types out of the box: GIN (for jsonb, arrays), GiST (for geospatial, full-text), and BRIN (for large, ordered datasets). This provides more tools for fine-tuning performance on complex data. | Postgres: For applications with diverse data types like geospatial, complex text search, or intricate JSON structures. Its specialized indexes provide more direct paths to optimization. |

| Extensibility | Its main extensibility point is the pluggable storage engine architecture. While InnoDB is standard, you can swap in engines like MyISAM (read-heavy) or Memory (temporary tables) to tailor the database's core behavior. | Boasts a rich extension ecosystem. Extensions like PostGIS (geospatial), pgvector (AI/vector search), and TimescaleDB (time-series) add entirely new, powerful capabilities directly into the database. | Postgres: For teams wanting to consolidate specialized data workloads (like GIS, time-series, or vector search) into a single, powerful database. The extension model is incredibly versatile. MySQL’s model is more about fundamental storage behavior. |

Ultimately, while MySQL provides a powerful and optimized experience for its core strengths, PostgreSQL's architecture often provides more specialized tools for developers who need to push the boundaries with complex data types and query patterns.

A Closer Look at Advanced Data Handling: JSON and Arrays

Modern apps are hungry for semi-structured data, making native JSON support a key battleground. Both databases have strong offerings, but their approaches reveal different priorities.

MySQL introduced the JSON data type, which stores documents in an efficient binary format. You get a good set of functions to create, search, and modify JSON, which is a massive leap from the old days of just stuffing it into a TEXT column.

Postgres, on the other hand, gives you two options: json and the more powerful jsonb. The jsonb type is the real star; it stores data in a decomposed binary format, removes duplicate keys, and—most importantly—can be indexed for incredibly fast queries, even on deeply nested data.

Practical Takeaway: For basic JSON storage and straightforward queries, MySQL's

JSONtype is perfectly fine. But if your application lives and breathes complex JSON that needs to be queried at scale, PostgreSQL'sjsonbis the superior tool for the job.

Imagine an e-commerce site where product specs are stored in a JSON column.

MySQL Query:

SELECT product_name

FROM products

WHERE JSON_EXTRACT(specs, '$.color') = 'blue';

PostgreSQL Query (with a GIN index):

-- First, you create an index for blazing-fast lookups

CREATE INDEX idx_products_specs ON products USING GIN (specs);

-- Now, your query is highly efficient

SELECT product_name

FROM products

WHERE specs @> '{"color": "blue"}';

That GIN (Generalized Inverted Index) on the jsonb column gives PostgreSQL a serious performance edge for these kinds of queries. Postgres also has native support for arrays of any data type, which is another powerful tool for modeling data without resorting to extra joins.

Specialized Indexing Strategies

Great indexing is the secret sauce of database performance, and this is where PostgreSQL’s extensibility really shines. Both databases use standard B-tree indexes, which are the workhorse for most equality and range queries. But Postgres goes further, offering a variety of specialized index types built for specific data challenges.

- GIN (Generalized Inverted Index): Perfect for indexing composite values, like all the keys in a

jsonbdocument or the words in a full-text search document. - GiST (Generalized Search Tree): A flexible index that powers a huge range of use cases, from geospatial queries with PostGIS to complex full-text search.

- BRIN (Block Range Index): A brilliant solution for massive tables where data has a natural order (like timestamped logs). It creates a tiny index by storing summaries for large blocks of data, making it incredibly efficient.

MySQL, especially with InnoDB, has a highly optimized B-tree implementation and solid full-text search. However, it simply doesn't have the same built-in variety of specialized indexes as Postgres. If your application deals with geospatial data, complex search, or other non-traditional data structures, Postgres gives you more specialized tools right in the core product.

Concurrency Control with MVCC

Both MySQL (using InnoDB) and PostgreSQL rely on Multi-Version Concurrency Control (MVCC) to manage simultaneous reads and writes. This is the magic that lets your application handle high traffic without readers and writers constantly blocking each other. MVCC works by giving each transaction a "snapshot" of the data, ensuring consistency.

While the core principle is the same, the mechanics differ slightly. PostgreSQL's implementation can sometimes lead to table bloat because old row versions aren't removed immediately. It depends on a cleanup process called VACUUM (which runs automatically these days) to reclaim that space.

InnoDB in MySQL handles this differently, storing old row versions in a separate "rollback segment." This approach can be more efficient at managing space, but both systems need proper tuning to perform well under heavy, continuous write loads. For most applications, both databases provide fantastic concurrency. You can learn more about how different data structures impact performance in our guide on effective database management.

A Tale of Two Extensibility Models

The way each database handles extensibility might be their most fundamental architectural difference.

MySQL's claim to fame here is its pluggable storage engine architecture. While InnoDB is the default for a reason, you can switch to other engines like MyISAM (for read-heavy, non-transactional workloads) or Memory (for caching). This lets you change the very foundation of how your tables store and access data.

PostgreSQL's approach is a vibrant extension ecosystem. Extensions are powerful add-ons that can introduce new data types, custom functions, new index types, and even entirely new capabilities. This model has given birth to some incredible tools:

- PostGIS: The gold standard for geospatial data, turning Postgres into a full-featured geographic database.

- pgvector: Adds vector similarity search, making Postgres a powerful engine for AI and machine learning applications.

- TimescaleDB: An extension that transforms Postgres into a world-class time-series database.

This framework makes PostgreSQL unbelievably versatile. It allows a single database to handle workloads that would otherwise require you to manage multiple, separate, specialized systems.

Decoding Performance for Real-World Workloads

Database performance benchmarks are a classic battleground in the MySQL vs. PostgreSQL debate, but honestly, abstract numbers can be incredibly misleading. There's a long-standing belief that MySQL is just plain faster, but we need to dig into that. While it definitely screams in certain scenarios, performance isn't a simple scorecard—it's all about your specific workload.

The truth is a lot more nuanced. MySQL earned its reputation for speed in environments hammered by simple, high-throughput reads and writes. On the other hand, PostgreSQL often shines when things get complicated, easily handling complex queries, massive datasets, and heavy concurrent use that requires more robust data management.

Write-Intensive vs. Read-Intensive Workloads

MySQL's architecture, especially its thread-per-connection model, is finely tuned for write-heavy applications. Picture a system like WordPress or any high-traffic site where thousands of users are firing off simple inserts—comments, likes, log entries. In these situations, MySQL's lean design really comes into its own.

In a pure, high-scale, write-intensive showdown, MySQL can sometimes edge out Postgres by up to 30% in raw throughput. But for most real-world applications, the performance is pretty comparable. What's far more important is this: a single missing index can cripple either database, causing a 10x to 1000x slowdown. Good optimization will always beat a default engine choice, hands down. You can find some great Postgres vs MySQL performance deep dives that explore this further.

PostgreSQL, however, really starts to pull away when complexity is the name of the game. Its query planner is significantly more advanced, making it a beast at optimizing tricky joins, subqueries, and analytical functions. This makes it the go-to for data warehousing, BI dashboards, or any app that needs to crunch huge amounts of data to find meaningful insights.

Actionable Insight: If your main performance goal is raw

INSERTstatements per second, MySQL is a very strong choice. But if your application’s real value lies in complex data analysis and sophisticated reads, PostgreSQL’s query optimizer will almost certainly serve you better.

Finding and Fixing Performance Bottlenecks

At the end of the day, the choice between MySQL and PostgreSQL is rarely the real reason for bad performance. The usual suspects are poorly designed schemas, unoptimized queries, and lazy indexing. Instead of obsessing over the engine, you'll get far more mileage by focusing your energy there.

Here is an actionable checklist for diagnosing performance problems in either system:

- Analyze Your Query Plans: Use

EXPLAIN ANALYZE(Postgres) orEXPLAIN(MySQL) before committing any complex query. Hunt for "Seq Scan" (Postgres) or "Full Table Scan" (MySQL) on large tables—they are giant red flags pointing straight to a missing or misused index. - Get Your Indexing Right: Don't stop at primary keys. Index your foreign keys and any columns that show up regularly in

WHEREclauses,JOINconditions, orORDER BYstatements. Practical example: If users often search for products bycategory_id, an index on that column is non-negotiable. - Tune Your Configuration: Both databases ship with conservative default settings. For Postgres, a good starting point is setting

shared_buffersto 25% of your system's RAM. For MySQL,innodb_buffer_pool_sizeshould be set to 50-75% of available RAM on a dedicated database server. - Use a Connection Pooler: For any production PostgreSQL deployment, use a tool like PgBouncer. It’s not optional; it’s essential. While MySQL's threading is lighter, connection pooling is still a best practice to avoid the constant overhead of setting up new connections in a high-traffic environment.

By mastering these fundamental optimization techniques, you can build a high-performance system on either MySQL or Postgres. The database engine is just the foundation; how you build on it is what truly determines the outcome.

When to Choose MySQL and When to Choose Postgres

Making the final call between MySQL and Postgres isn't about crowning a technical winner. It’s about matching the right tool to the job you actually have. The debate is settled by looking at your project's specific needs, not just a list of features. This is less about pros and cons and more about finding the right fit for your application.

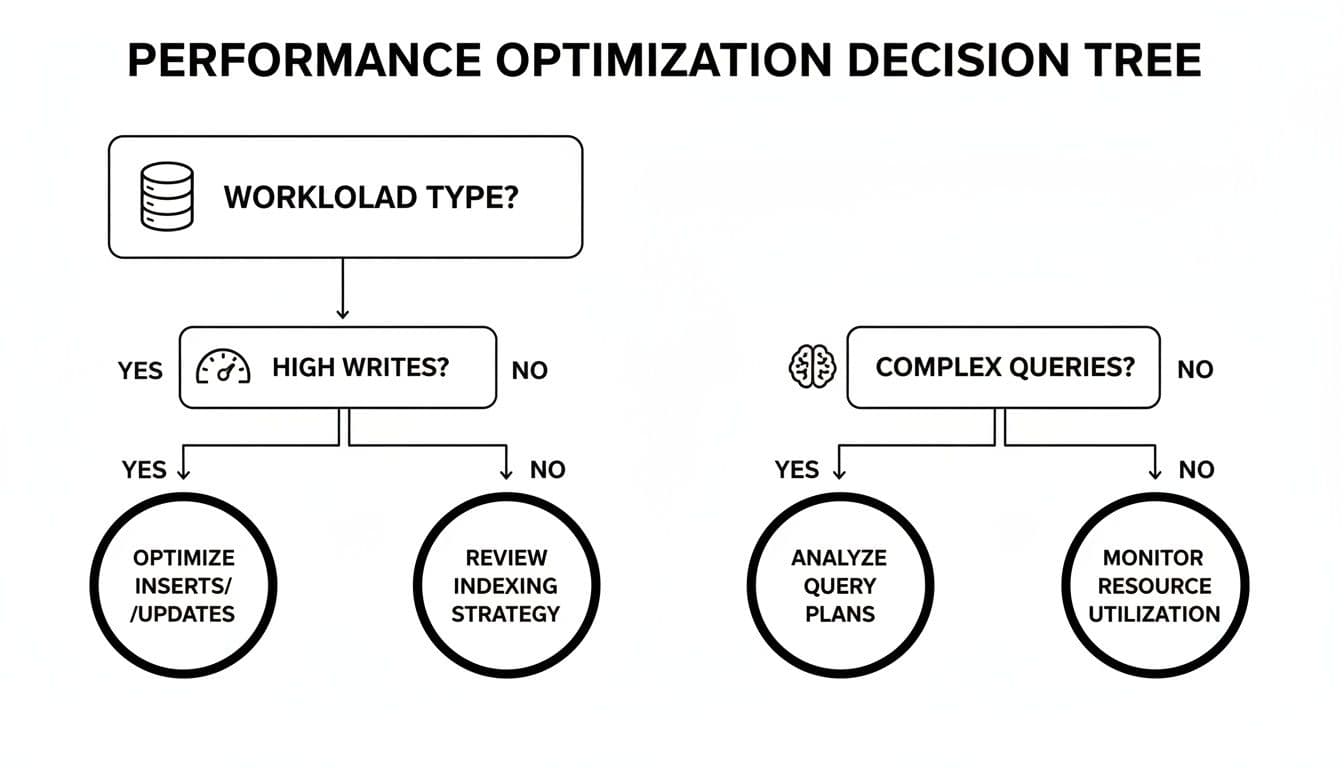

This decision tree gives you a quick visual guide for thinking through the performance trade-offs, especially when it comes to high-volume writes versus complex, analytical queries.

The core idea is this: MySQL often shines when dealing with a high velocity of simple write operations. In contrast, PostgreSQL's sophisticated query planner gives it a serious edge for deep, complex data analysis.

Choose MySQL for Web-Scale Simplicity

There’s a reason MySQL still powers a huge chunk of the web: it's a pragmatic, high-performance workhorse. Its architecture is built from the ground up for scenarios where speed, straightforwardness, and reliability are the name of the game.

MySQL is probably your best bet when your project looks like this:

- E-commerce Platforms: For a typical online store, the workload is dominated by reads (people browsing products) with occasional bursts of simple writes (people buying stuff). MySQL’s fantastic read-replication and easy setup make it a scalable and cost-effective choice for this classic use case.

- Content Management Systems (CMS): Think about applications like WordPress or Drupal. They generate a staggering volume of simple reads and writes. MySQL’s raw efficiency in handling these bread-and-butter operations makes it the battle-tested, default engine for most of the web's content.

- High-Volume Transactional APIs: If you're building a service that needs to hammer the database with a huge number of simple, independent transactions—like logging events or updating user statuses—MySQL's performance on these kinds of write-heavy workloads is a major advantage.

Actionable Insight: MySQL's strength is its operational simplicity and raw speed for well-defined, repetitive tasks. It’s the go-to when your application needs to do a few things very, very fast, over and over again.

Choose Postgres for Data Integrity and Complexity

PostgreSQL really comes into its own when the value of your application is tied to the complexity and integrity of its data. Its strict, standards-compliant nature and incredible extensibility make it a fortress for mission-critical information.

You'll want to lean on Postgres for these kinds of advanced use cases:

- Financial and Analytical Systems: When you absolutely cannot compromise on data integrity, Postgres is the safer choice. Its support for transactional DDL means schema changes are atomic, which prevents partial, broken updates during migrations. This is a must-have for financial apps where consistency is everything. On top of that, its advanced analytical functions make it a true powerhouse for data warehousing.

- Geospatial Services: Honestly, this isn't even a fair fight. The PostGIS extension transforms PostgreSQL into a full-blown geographic information system (GIS) that runs circles around most other solutions, open-source or commercial. If your app involves maps, location data, or spatial analysis, Postgres is the only real answer.

- AI and Vector Search Applications: The AI boom has created a new killer use case for PostgreSQL. With the pgvector extension, Postgres becomes a potent vector database. It can handle similarity searches for machine learning models, recommendation engines, and image recognition systems, letting you keep your operational data and vector embeddings all in one place.

Practical Example: An AI-driven e-commerce site could use pgvector to find visually similar products. A user views a blue striped shirt, and a query like SELECT product_id FROM items ORDER BY embedding <-> '[...]' LIMIT 5; instantly finds the top 5 items with similar vector embeddings. This creates a much more intuitive "shop the look" experience than basic keyword matching ever could.

Frequently Asked Questions About MySQL vs. Postgres

When you're weighing MySQL against Postgres, the conversation inevitably moves from high-level features to practical, on-the-ground questions. How hard is it to learn? What's the real story on migration? Let's tackle the common questions that come up when teams are making this call.

One of the first things people ask is about market share and popularity. While Oracle dominates the overall database market, the open-source world is a much closer race. You’ll see MySQL and SQL Server consistently in the top three of most market rankings, but PostgreSQL is always climbing. It’s highly valued for its robust feature set and flexibility.

The numbers tell an interesting story. While metrics like job postings often reflect MySQL's enormous installed base, you’ll frequently see PostgreSQL leading the pack in developer desire for new projects. That’s a trend worth watching. You can see this play out in various database popularity rankings on Statista. This dynamic gets to the heart of their different ecosystems, which shapes everything from community support to available talent.

Which Database Is Easier for Beginners to Learn?

Hands down, MySQL is more approachable for beginners. The setup is typically more straightforward, and its syntax is a bit more forgiving for basic CRUD operations. Plus, the sheer volume of tutorials and guides aimed at new web developers is just massive.

PostgreSQL, on the other hand, has a steeper learning curve. It sticks to the SQL standard much more rigorously and introduces more complex data types and concepts right from the start. While that means more to absorb upfront, it’s an investment that pays huge dividends for developers building applications that need its advanced features.

Actionable Insight: If you're a new developer building a simple blog or web app, MySQL will get you up and running faster. If your goal is to become a data scientist or build complex, data-intensive systems, learning PostgreSQL from day one is an incredibly valuable career move.

Is It Difficult to Migrate from MySQL to PostgreSQL?

The answer is: it depends. A migration can be anything from a weekend project to a multi-month ordeal, all hinging on the complexity of your application. While plenty of automated tools can handle the basic schema and data transfer, the real headaches are almost always in the details.

Here is an actionable checklist of common friction points to watch for:

- Data Type Mismatches: Little differences, like MySQL's

DATETIMEvs. Postgres'sTIMESTAMP WITH TIME ZONE, can cause subtle but significant bugs. You need a clear mapping strategy. - SQL Dialect Variations: You can't just copy-paste functions and stored procedures. Translating procedural code from MySQL’s dialect to

PL/pgSQLhas to be done manually. - Case Sensitivity: PostgreSQL is case-sensitive for identifiers by default. Queries that worked perfectly in MySQL might suddenly break after migration.

- Auto Increment Behavior: MySQL's

AUTO_INCREMENTis a column property, while Postgres usesSERIALtypes backed by sequences. The underlying mechanics are different and need to be handled carefully.

A full data audit and a dedicated, thorough testing phase are absolutely non-negotiable for any serious migration project. For more tips on managing your database, you can find effective strategies in our blog.

How Does Community and Commercial Support Compare?

Both databases boast massive, vibrant open-source communities. For just about any problem you can imagine, someone has already asked about it—and gotten an answer—on forums or Stack Overflow.

When it comes to paid support, their models diverge. MySQL is owned by Oracle, so there’s a very clear, corporate path to getting enterprise-level support contracts. PostgreSQL remains community-driven, with commercial support provided by a healthy ecosystem of third-party vendors like EDB and Crunchy Data. This gives you more choice and a different flavor of support.

Juggling different database environments doesn't have to be a headache. With TableOne, you can connect to PostgreSQL, MySQL, SQLite, and more from a single, modern desktop app. Edit data, compare schemas, and run queries with a tool built to make your daily data work fast and predictable—all for a one-time license. Try the TableOne 7-day free trial.

Authored using Outrank app